Learning With Errors

1. What is LWE?

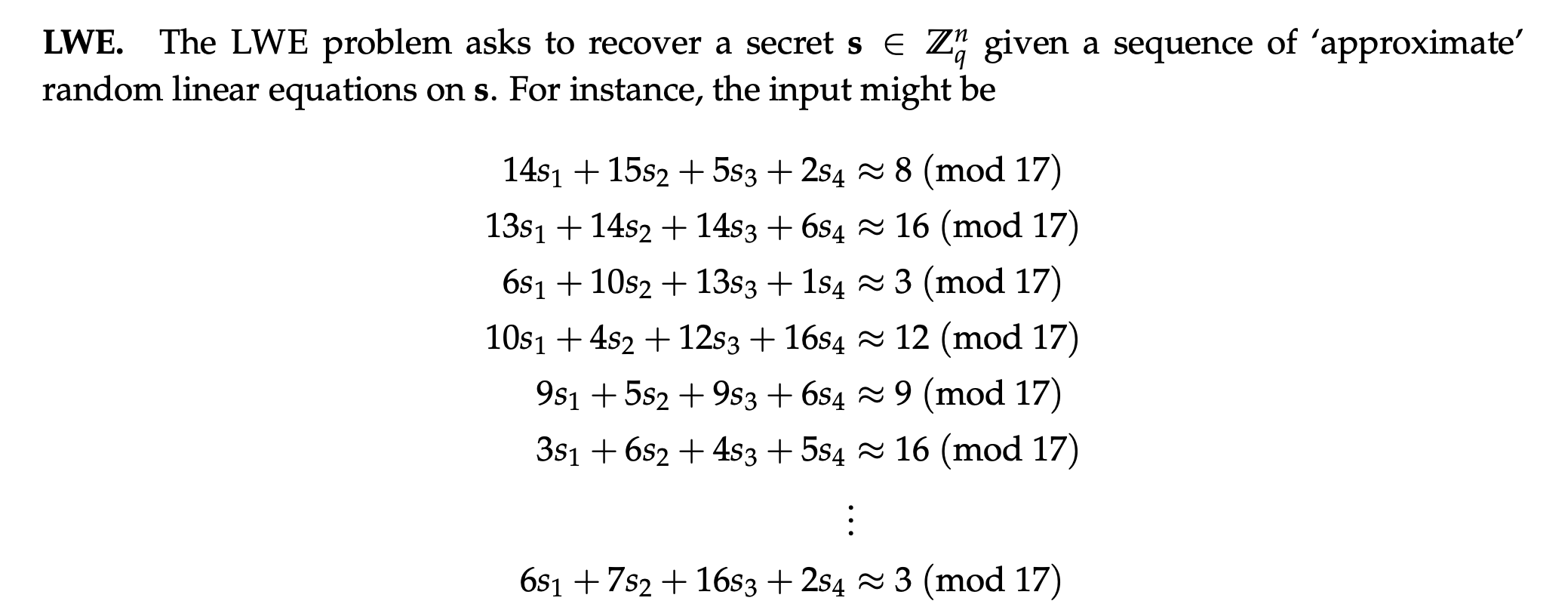

Here Oded Regev gives a definition of LWE :

2. Get started

linear system of equations:

if we want to get the value of $x_1, x_2, x_3$, we can use Gaussian Elimination to solve this problem. Or we can just use matrix to represent this problem:

LWE settings:

How about add an small error term $e?$

This time, we get:

Or in other words, we can represent it in matrix style where $e$ is the error term:

Then now we put the equations into finite field:

Then we need to recover the secret $x$.

General situation:

As the same, we need to recover $s.$ Where $e$ is small.

Then from this equation we can construct a lattice $L$ that satisfies:

Our target is to recover secret $s.$

Solve LWE

Step 1: LLL algorithm

First we apply LLL algorithm to lattice $L$ to get a good basis.

Step 2: Solve CVP using Babai’s algorithm

We know that there is a close vector named $b$ that belongs to $L$ and is close to $a$ because $e$ is small which is a CVP. So we can solve for $b$ using Baibai’s algorithm to get rid of the error term $e$ which is:

so we just need to solve this equation over the ring mod p.

Example code

1 | def Babai_closest_vector(L, w): |

Solve LWE by solving SVP

After participating in 天融信杯 I have searched a lot of papers to find how to solve LWE problem in a faster way. So it comes a method called Embedding method to convert CVP to SVP:

Always, we set $M=1$ to increase the chance that BKZ will return the correct answer.

Also there will be some optimization to help us solve LWE.

Conclusion

Solving LWE problem is NP hard which can be reduced to CVP, SVP . So is we wanna solve LWE we mush solve CVP, SVP first or we must solve NP problem. In other words, we can make lattice reduction algorithm faster to help us solve LWE much more easily. Low dimention only!!